Machine learning models don't work out-of-the-box with maximum performance. Their accuracy and generalization depend heavily on hyperparameters — the configuration settings that control how a model learns. Unlike parameters (which the model learns from data), hyperparameters must be set manually or optimized through tuning.

Getting these settings right is often the difference between a model that barely works and one that delivers production-ready insights. This guide explores the essentials of hyperparameter tuning, practical techniques, and real-world applications.

What Are Hyperparameters?

Hyperparameters are settings that define the structure and learning process of machine learning models. They are not learned from data but chosen before training.

Examples include:

Why Hyperparameter Tuning Matters

Choosing poor hyperparameters can lead to:

Tuning ensures that the model balances bias and variance while maximizing performance.

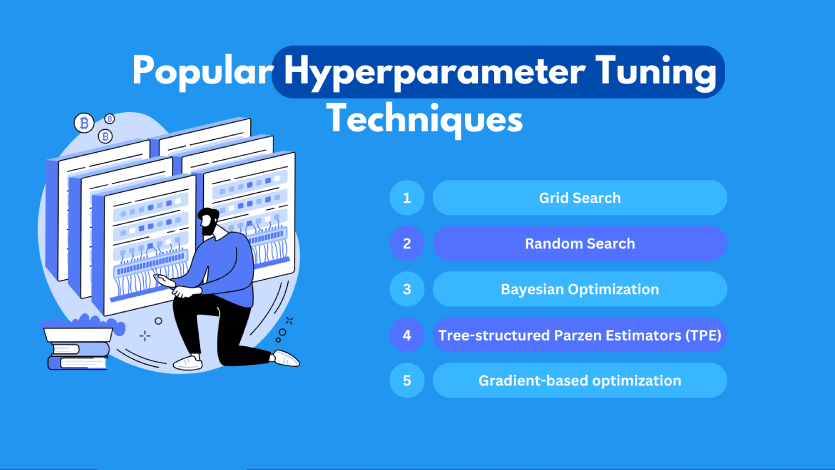

Common Hyperparameter Tuning Techniques

Grid Search

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

param_grid = {

'n_estimators': [100, 200, 300],

'max_depth': [None, 10, 20]

}

grid = GridSearchCV(RandomForestClassifier(), param_grid, cv=5)

grid.fit(X_train, y_train)

print(grid.best_params_)

Random Search

Bayesian Optimization

Hyperband

Automated Tools

Comparison Table: Hyperparameter Tuning Methods

| Method | Strengths | Weaknesses | Best For |

|---|---|---|---|

| Grid Search | Exhaustive, guarantees best in search space | Very slow, expensive for large spaces | Small parameter spaces |

| Random Search | Faster, explores large spaces | No guarantee of optimal solution | High-dimensional problems |

| Bayesian Optimization | Efficient, guided search | Complex to implement | Expensive training tasks |

| Hyperband | Resource-efficient, stops poor models early | Implementation complexity | Large-scale experiments |

| Automated Tools | User-friendly, integrates with ML frameworks | Less control, may hide details | Rapid experimentation |

Best Practices for Hyperparameter Tuning

Real-World Examples

Conclusion

Hyperparameter tuning is the backbone of building high-performing machine learning models. While it can be computationally expensive, strategic use of techniques like Random Search, Bayesian Optimization, or Hyperband can drastically cut time and cost.

By following best practices and leveraging modern tools, practitioners can transform a "good enough" model into one that performs at scale with precision.