Artificial Intelligence (AI) has made huge strides in recent years, powering everything from recommendation systems to medical diagnostics. Yet, one of the biggest concerns remains: trust. How can we rely on decisions made by AI when we don't understand how those decisions were made?

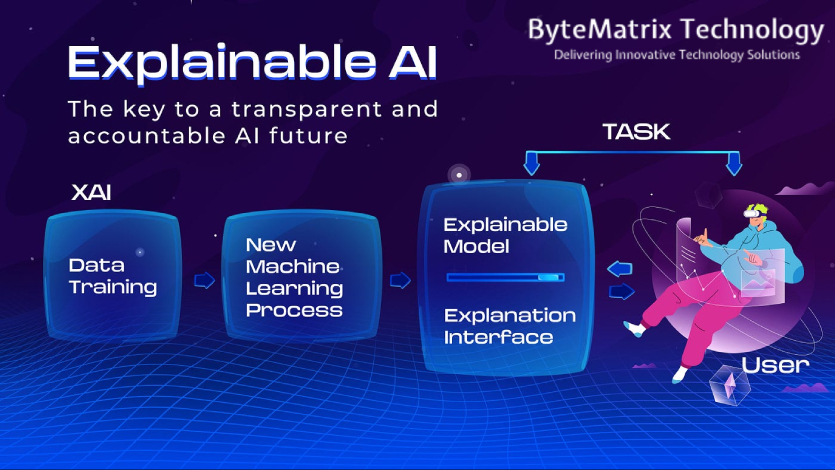

This is where Explainable AI (XAI) comes in. It aims to make the "black box" of machine learning more transparent, interpretable, and accountable. By ensuring models are not just accurate but also understandable, XAI bridges the gap between raw computational power and human trust.

What is Explainable AI?

Explainable AI refers to a set of methods and techniques that allow humans to understand and interpret the results generated by machine learning models. Instead of just giving an output, XAI provides insights into why and how the model reached that decision.
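To make the difference between "just an output" and "an output plus its reasons" concrete, here is a minimal sketch in Python. It is not taken from any particular library or from a real system: the loan-approval setting, the feature names, the weights in `WEIGHTS`, and the function `predict_with_explanation` are all hypothetical, and the features are assumed to be already scaled. For a simple linear model like this one, each feature's contribution to the score is its weight times its value, so the explanation can be read off directly.

```python
import math

# Hypothetical weights for a toy loan-approval model (illustrative values only).
WEIGHTS = {"income": 0.8, "debt_ratio": -1.5, "years_employed": 0.4}
BIAS = -0.2


def predict_with_explanation(features):
    """Return (probability, contributions) for a logistic-style linear model."""
    # Each feature's contribution is its weight times its (already scaled) value.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-score))
    return probability, contributions


applicant = {"income": 1.2, "debt_ratio": 0.9, "years_employed": 0.5}
probability, contributions = predict_with_explanation(applicant)

print(f"approval probability: {probability:.2f}")
# List features from most to least influential, with the direction of their effect.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    direction = "raises" if value > 0 else "lowers"
    print(f"  {name} {direction} the score by {abs(value):.2f}")
```

A non-explainable system would return only the probability. The per-feature breakdown is what lets a person see which inputs pushed the decision up or down. Complex models do not decompose this neatly, which is why dedicated XAI techniques exist.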

For example:

Why Explainability Matters

AI is increasingly being used in critical areas — healthcare, finance, hiring, criminal justice, and more. Lack of explainability can lead to:

Explainability supports accountability, fairness, and confidence in AI systems.

Techniques for Explainable AI

Different methods exist to shed light on complex models. Some common ones include:

These methods don't just provide results; they also explain the reasoning behind them.
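As one illustration of how such a method can work, the sketch below implements permutation importance, a widely used model-agnostic technique chosen here for the example rather than one this article names. The idea is to shuffle one feature at a time and measure how much the model's accuracy drops: a large drop suggests the model relies on that feature. The code uses only the Python standard library, and the helper names (`accuracy`, `permutation_importance`, `toy_model`) and the synthetic data are assumptions made for the example.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(model, rows, labels, n_features, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild the rows with only feature j replaced by shuffled values.
            shuffled = [
                row[:j] + (value,) + row[j + 1:]
                for row, value in zip(rows, column)
            ]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy "black box": it only looks at feature 0 and ignores feature 1.
def toy_model(row):
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random()) for _ in range(200)]
labels = [toy_model(row) for row in rows]

scores = permutation_importance(toy_model, rows, labels, n_features=2)
for index, score in enumerate(scores):
    print(f"feature {index}: mean accuracy drop {score:.3f}")
```

In this toy setup the second feature receives an importance of exactly zero, because the model never reads it, while shuffling the first feature degrades accuracy noticeably. The same procedure applies to any model that can be called as a function, which is what makes the technique model-agnostic.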

Real-World Applications of XAI

By applying XAI, industries can make AI not only smarter but also more responsible.

Challenges of Explainable AI

While XAI is promising, it comes with hurdles:

The goal is to find a balance — making explanations meaningful and actionable without overwhelming users.

Comparison Table: Traditional AI vs Explainable AI

| Aspect | Traditional AI | Explainable AI |
|---|---|---|
| Transparency | Often a "black box" | Provides clear reasoning for outputs |
| Trust | Hard to build due to lack of clarity | Increases user confidence and adoption |
| Compliance | Struggles with regulations like GDPR | Supports regulatory requirements |
| Bias Detection | Difficult to identify hidden biases | Can highlight and reduce unfairness |
| Use Cases | Effective for predictions but less accountable | Essential in healthcare, finance, law, and safety-critical industries |

Conclusion

Explainable AI is not just a technical enhancement — it's a moral and regulatory necessity. As AI continues to shape our world, making it transparent ensures fairness, builds trust, and drives adoption in critical sectors.

In the coming years, businesses and researchers that prioritize explainability will have a clear competitive edge. After all, in AI, it's not enough to be smart — you also have to be understood.